Microsoft has reshaped its update cadence for Microsoft 365 applications, and product owners need to adjust their testing and release strategies accordingly.

- Shorter support windows: The Semi-Annual Enterprise Channel now has only eight months of support (down from fourteen), giving IT teams less time to validate and stabilize builds.

- More frequent updates: Most interactive, end-user systems are expected to move to the Monthly Enterprise or Current Channels.

- Longer rollback: The Monthly Enterprise Channel now provides a two-month rollback option (up from one), creating a small buffer to mitigate risk.

The long runway that enterprises once relied on for regression testing and validation is narrowing. To keep pace without compromising stability, end-user computing and Microsoft 365 product owners should embrace automation and modern release validation practices.

The Solution: Automate, Validate, and Stay Confident

Login Enterprise can help IT teams build confidence and preserve the end-user experience through:

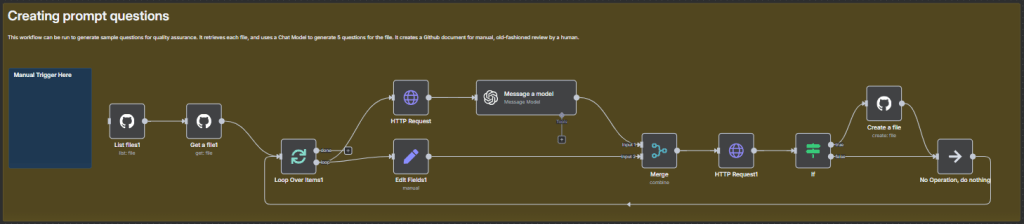

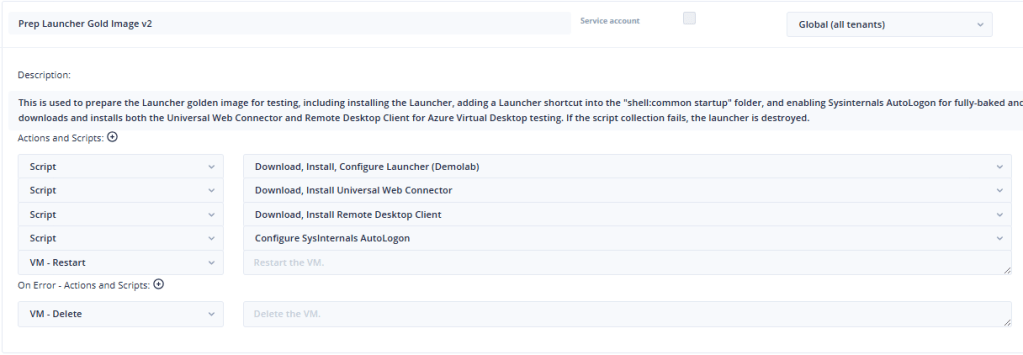

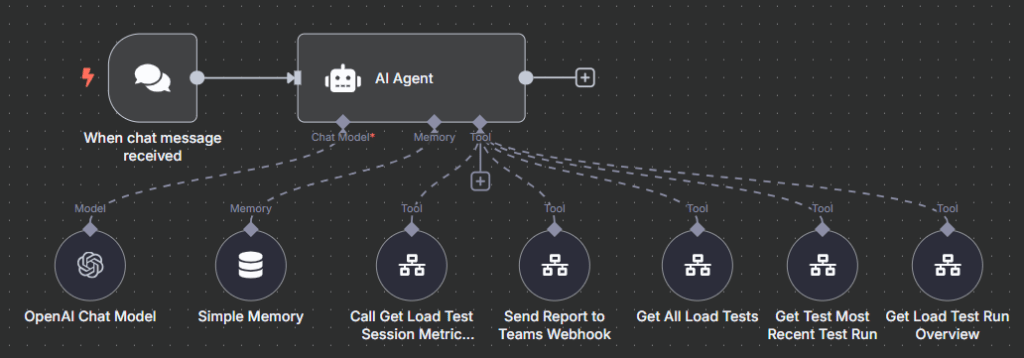

- Automated regression testing: Application testing can simulate end-user workflows across Microsoft 365 applications, including macros or complex VBA in Excel.

- Continuous validation: Supports integration with CI/CD pipelines via API, enabling frequent, repeatable tests.

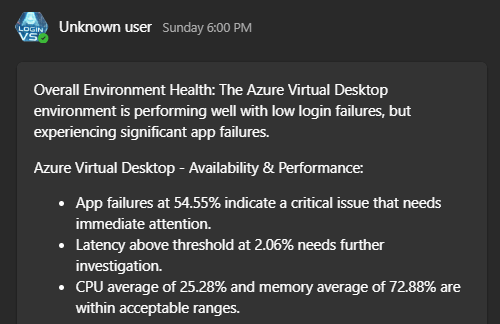

- Actionable insights: Detailed reporting and customized alerting help IT identify broken workflows and prioritize fixes.
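As a rough illustration of the continuous-validation idea, a pipeline step can turn the results of a test run into a pass/fail gate. The Python sketch below makes several assumptions: the `StepResult` shape and the `gate` function are hypothetical names invented for this example, not part of the Login Enterprise API, and retrieving results from the platform is left out entirely.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class StepResult:
    """One step of an application test (hypothetical shape, not the product's schema)."""
    name: str
    passed: bool


def gate(results: list[StepResult]) -> int:
    """Return a process exit code: 0 if every step passed, 1 otherwise."""
    failed = [r.name for r in results if not r.passed]
    for name in failed:
        print(f"FAILED: {name}")
    # An empty run counts as a failure so a misconfigured test cannot pass silently.
    return 0 if results and not failed else 1


# Illustrative data only; a real pipeline would load results from the test platform.
sample = [
    StepResult("Open workbook", True),
    StepResult("Run macro button", False),
]
exit_code = gate(sample)
print(f"Pipeline exit code: {exit_code}")
```

A CI job could pass this exit code back to the pipeline runner so that a failed workflow blocks promotion of the new build to the next deployment ring.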

Login Enterprise allows end-user computing teams to proactively validate Microsoft 365 updates, reduce downtime, and maintain confidence, even as updates accelerate.

Examples: Excel Testing at Scale

The screenshot below shows Microsoft Excel and an example spreadsheet with custom VBA exposed through buttons. This simplified setup mirrors how many enterprise teams manage quality control for critical Excel customizations today.

In a traditional quality assurance cycle, testers manually step through each Excel use case, documenting results and timing by hand.

With Login Enterprise, the same process is automated, repeatable, and scalable. The example Application Test report below not only shows that each test case executed successfully but also captures the runtime of every step. These results can be easily shared with a Change Advisory Board (CAB) or business stakeholders, translating technical findings into clear insights on risk and stability.
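Per-step runtimes become more useful when compared against a known-good baseline. The sketch below is one possible way to flag steps that slowed down after an update. It assumes runtimes have already been exported as plain step-name-to-seconds mappings. The `slow_steps` function, the 20% tolerance, and the sample numbers are all illustrative assumptions, not product behavior or real measurements.

```python
from __future__ import annotations


def slow_steps(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.20,
) -> dict[str, float]:
    """Map each step that slowed by more than `tolerance` to its relative increase."""
    flagged = {}
    for step, base_seconds in baseline.items():
        # Skip steps missing from the new run or lacking a usable baseline.
        if step not in current or base_seconds <= 0:
            continue
        increase = (current[step] - base_seconds) / base_seconds
        if increase > tolerance:
            flagged[step] = round(increase, 2)
    return flagged


# Made-up runtimes in seconds, for illustration only.
baseline_run = {"Open workbook": 2.0, "Run macro": 4.0, "Save": 1.0}
updated_run = {"Open workbook": 2.1, "Run macro": 6.0, "Save": 1.0}

for step, increase in slow_steps(baseline_run, updated_run).items():
    print(f"{step}: +{increase:.0%} vs. baseline")
```

A short list like this is easier for a CAB to review than raw timings, since it names only the steps that changed meaningfully.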

The Takeaway: Agility Requires Automation

While this new release cycle requires new management processes, there is an opportunity to rethink how enterprises approach change management altogether. Here are some things to consider:

- Testing timelines are shrinking: An accelerated Microsoft 365 cadence means IT can no longer rely on long validation windows.

- Agility requires automation: Continuous integration and validation should be treated as core components of end-user computing strategy.

- Reporting must bridge the tech gap: Clear, data-driven reporting must be meaningful to both IT and business stakeholders so it can inform risk decisions at the CAB.

If you have any questions or enjoyed the blog, feel free to reach out or drop a like!